A few companies, including Oligo Security, RevealSecurity and Miggo Security, are starting to pave the way for Application Detection and Response (ADR). It is easy to get lost trying to understand what these solutions aim to tackle. First, each of them likely focuses on what it does best. For example, Oligo uses eBPF observability to detect runtime library deviations, while RevealSecurity establishes a baseline for users and applications and uses machine learning with a clustering engine to detect deviations, in effect applying UEBA (User and Entity Behavior Analytics) at the application layer.

Other solutions will probably emerge. In this article, based on my experience with application security, especially RASP (and some research :)), I will share how these solutions differ and how they are likely to evolve.

DevOps, cloud computing, data pipelining, and AI introduce new complexities and thus new security threats and risks. A rule-based approach alone is simply not enough: it doesn't scale, and it requires a deep understanding of numerous technologies. We are moving too fast with features and business capabilities, often leaving application runtime security behind. An attacker will show no mercy when they see a chance to strike hard, potentially damaging a nation's financial system.

If there is a vulnerability that needs immediate patching and your entire business relies on impacted applications deployed on your cloud workload, what do you do? Do you frantically try to patch all applications at once (potentially thousands), which could take hours or days and give attackers a window to exploit it in the meantime? Or do you shut down the entire service at the cost of losing customers and money? A choice will inevitably have to be made.

We need to cope with a wide array of attacks that we simply can't control.

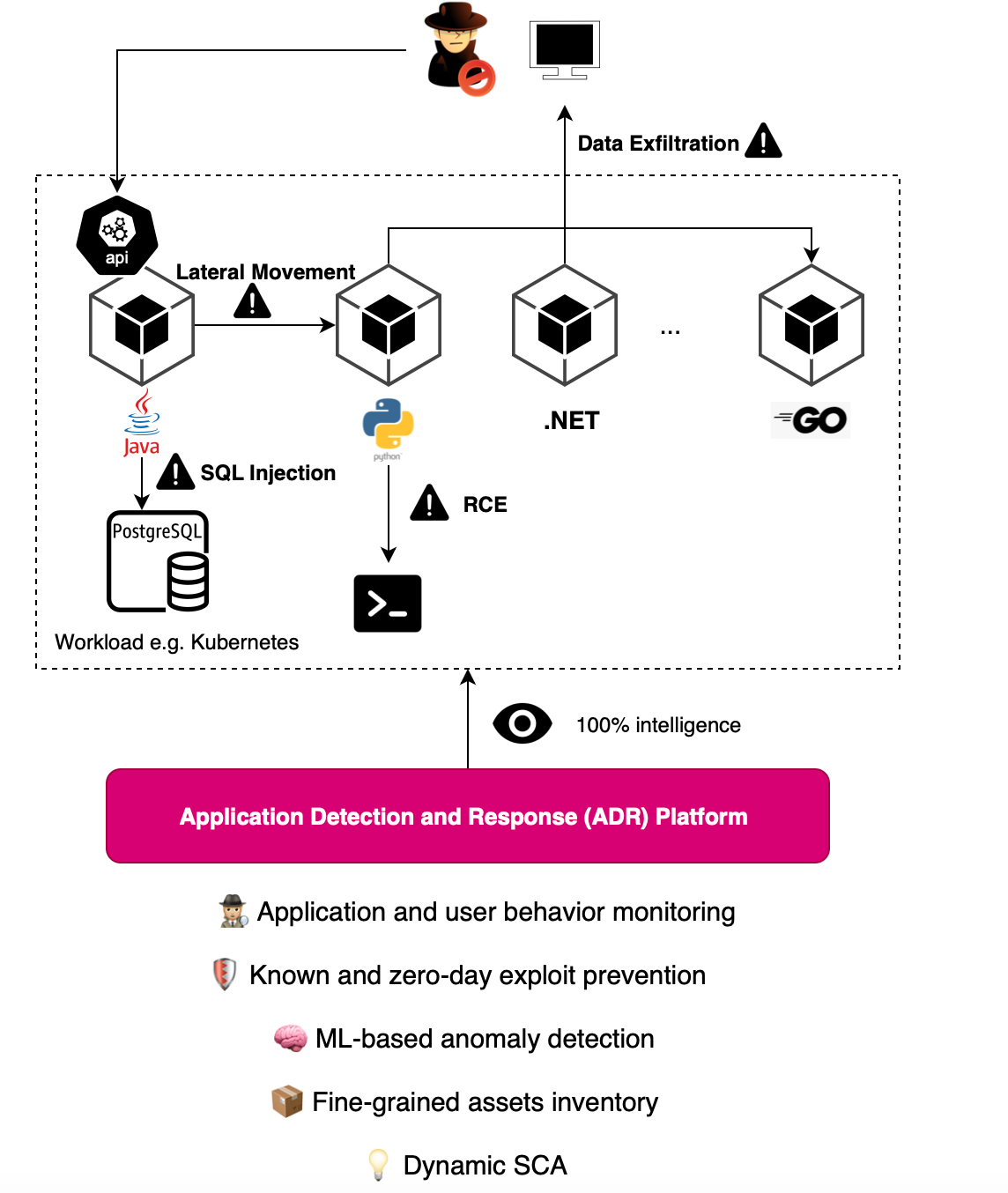

Previously, we could inspect traffic with a Web Application Firewall (WAF), while EDR (Endpoint Detection and Response) focused on the process level and protected the host. ADR now adds another dimension: the gold dimension, where 100% of the intelligence resides.

WAFs are easily bypassed, for example with encoding tricks. EDR can protect applications to a certain extent, but the information available to an EDR about the application is relatively limited. Its scope also extends to other workloads, such as containers and VMs. Application security, however, lags behind, and this is where ADRs come into play.
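To illustrate the encoding problem, here is a minimal sketch assuming a hypothetical signature-based filter that inspects the raw query string, while the application behind it URL-decodes the value twice (for example, once in a proxy and once in the framework). Real WAFs do normalize input; the gap appears whenever the WAF and the application decode differently. The signature list and function names are illustrative only.

```python
from urllib.parse import unquote

# Deliberately naive, hypothetical signature list: illustration only.
SIGNATURES = ["<script", "' or 1=1"]


def naive_waf_blocks(raw: str) -> bool:
    """Match signatures against the request string exactly as received (no decoding)."""
    lowered = raw.lower()
    return any(sig in lowered for sig in SIGNATURES)


def app_view(raw: str) -> str:
    """What an application that URL-decodes twice would end up processing."""
    return unquote(unquote(raw))


payload = "q=%253Cscript%253Ealert(1)%253C/script%253E"  # double URL-encoded <script>

print(naive_waf_blocks(payload))            # False: no signature appears literally
print(app_view(payload))                    # q=<script>alert(1)</script>
print(naive_waf_blocks(app_view(payload)))  # True: same payload once decoded
```

The filter and the application disagree about what the request contains, and only the application's view matters for exploitation.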

Imagine having an immune system where, despite the numerous viruses that could harm you, you just can't get sick. This immunity might be acquired through an injection of an immunity booster. Now you can walk around freely without worrying about viruses, because you have been injected with this serum. This is what RASP (Runtime Application Self-Protection) aims to emulate.

RASP offers the capability to survive unpatched vulnerabilities at the cost of minimal performance overhead and some expense. However, RASP as implemented today is very complex, or at least not everyone implements it properly and comprehensively.

RASP is simply a term coined to say that we use the in-app context and empower applications to protect themselves without relying on perimeter-oriented technologies like WAFs.

Application Detection and Response (ADR) solutions are built on the foundation of RASP. They don't need to be implemented exactly like traditional RASP solutions, but they aim to complete what RASP has already achieved and proven incredibly effective at: monitoring everything that happens in the application and blocking attacks in real time. Context is king.

Some people mistakenly think of RASP as the next-generation WAF, but they are not the same. A WAF sits on the front line of web applications and likely blocks most obviously malicious traffic. RASP, however, handles situations that require more context.
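To make "more context" concrete, here is a minimal sketch of one well-known RASP-style check, assuming a hook at the database sink that sees both the final SQL string and the raw user input. Benign input should stay inside a single literal, while an injection changes the query's token structure. The tokenizer and the name `input_changes_structure` are hypothetical and deliberately simplistic; production agents rely on a real parser for the SQL dialect in use.

```python
import re

# Toy tokenizer: quoted string literals, numbers, words, the "--" comment marker,
# and any other single non-space symbol. Real agents use a dialect-aware SQL parser.
TOKEN_RE = re.compile(r"'(?:[^']|'')*'|\d+|\w+|--|[^\s\w]")


def input_changes_structure(query: str, user_input: str) -> bool:
    """Return True if user_input, where it appears in query, spans more than one token.

    Benign input lands inside a single literal; an injection breaks out of the
    literal and adds new tokens (operators, keywords, comments).
    Simplification: only the first occurrence of user_input is checked.
    """
    start = query.find(user_input)
    if not user_input or start == -1:
        return False
    end = start + len(user_input)
    overlapping = [m for m in TOKEN_RE.finditer(query)
                   if m.start() < end and m.end() > start]
    return len(overlapping) > 1


safe_input = "alice"
attack_input = "x' OR '1'='1"
template = "SELECT * FROM users WHERE name = '{}'"  # vulnerable string concatenation

print(input_changes_structure(template.format(safe_input), safe_input))      # False
print(input_changes_structure(template.format(attack_input), attack_input))  # True
```

A WAF only sees `x' OR '1'='1` in a request parameter and must guess; the in-app hook sees exactly how that value reshaped the query before it reaches the database.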

WAFs have been used for many years as an essential perimeter security technology, and almost every organization has a WAF (e.g. Cloudflare) guarding its traffic. A WAF can't detect everything, which is normal. Imagine standing at the entrance of a bank and trying to ensure that no one entering intends to rob it, based solely on the items they carry.

Business growth and innovative technologies are emerging at a dizzying pace, and you can't simply expect the application you are shipping to be free of backdoors or of an exploitable zero-day vulnerability leading to arbitrary code execution.

Many recent zero-days exploit behavior that nobody expected from a library, and that library runs with the same permissions as the main application code (Full Trust, hehe). An attacker can then implant a backdoor and interact with a plethora of libraries and applications.

Almost every attack triggers abnormal library or package behavior, which is where RASP proves powerful: it has the intelligence needed to take action and block malicious behavior. Yet not every exploit deviates from a baseline; an attacker might exploit a broken access control vulnerability and reach valuable resources without RASP noticing.
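Abnormal library behavior can be sketched as a per-library capability baseline: during a learning phase, record which sensitive capabilities (file, network, process, database) each library exercises, then flag any new combination. This is a minimal sketch, assuming the agent already attributes each event to a library by inspecting the call stack; the class, library names, and capability labels are hypothetical.

```python
from collections import defaultdict


class LibraryCapabilityBaseline:
    """Learn which sensitive capabilities each library exercises, then flag new ones."""

    def __init__(self) -> None:
        # library name -> set of capabilities observed during the learning phase
        self.allowed: defaultdict[str, set[str]] = defaultdict(set)
        self.learning = True

    def observe(self, library: str, capability: str) -> bool:
        """Record an event while learning; afterwards, return True if it is anomalous."""
        if self.learning:
            self.allowed[library].add(capability)
            return False
        # .get() avoids creating an empty entry for unknown libraries
        return capability not in self.allowed.get(library, set())


baseline = LibraryCapabilityBaseline()
# Learning phase: events assumed to be attributed to a library via the call stack.
for lib, cap in [("log-lib", "file.write"), ("http-client", "net.connect"), ("orm", "db.query")]:
    baseline.observe(lib, cap)
baseline.learning = False

print(baseline.observe("orm", "db.query"))          # False: seen during learning
print(baseline.observe("log-lib", "process.exec"))  # True: a logging library spawning a process
```

Note that a broken access control exploit would only produce events like `orm` → `db.query`, which this baseline considers normal; that limitation is exactly the blind spot described above.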

This is why we need a wider lens, and exactly why (I believe) ADRs were introduced in the first place.

ADRs not only protect your applications against zero-day and known vulnerabilities regardless of the technology (in contrast to RASP, which is closely tied to the underlying tech), but they also improve detection of other types of attacks that are too complex for traditional methods.

Typically, a baseline is established to better understand what constitutes normal or benign behavior, and any deviation from this baseline is then reported. Often, it is not a single action that is concerning but rather a sequence of actions leading to a command that signals an attack. Additionally, ADRs are designed with awareness of the supply chain and of distributed application architectures.
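The idea that a sequence, rather than a single action, can signal an attack can be sketched with the classic n-gram technique from system-call anomaly detection: learn every window of n consecutive events seen in benign traces, then report windows never seen before. This is a minimal sketch, assuming the agent emits one event name per observed action; the event names are hypothetical, and real ADRs may use richer statistical or machine learning models.

```python
from collections.abc import Iterable, Sequence

Gram = tuple[str, ...]


def ngrams(events: Sequence[str], n: int) -> list[Gram]:
    """Contiguous windows of n events, in order of occurrence."""
    return [tuple(events[i:i + n]) for i in range(len(events) - n + 1)]


def learn_baseline(traces: Iterable[Sequence[str]], n: int) -> set[Gram]:
    """Union of all n-grams seen in benign traces: the 'normal' sequences."""
    baseline: set[Gram] = set()
    for trace in traces:
        baseline.update(ngrams(trace, n))
    return baseline


def deviations(trace: Sequence[str], baseline: set[Gram], n: int) -> list[Gram]:
    """n-grams in a new trace that never appeared during learning."""
    return [gram for gram in ngrams(trace, n) if gram not in baseline]


benign = [
    ["http.request", "auth.check", "db.query", "http.response"],
    ["http.request", "auth.check", "file.read", "http.response"],
]
base = learn_baseline(benign, n=2)

# Every event below was seen during learning; only the order is new (auth was skipped).
suspicious = ["http.request", "db.query", "http.response"]
print(deviations(suspicious, base, n=2))  # [('http.request', 'db.query')]
```

Each event on its own looks benign; only the missing `auth.check` between the request and the query reveals the anomaly.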

Machine learning is a perfect fit for ADRs, enabling application baseline learning, access behavior monitoring, and anomaly detection, typically through a sequence-based approach (APIs accessed, system calls, etc.).

ADRs use agents (whether RASP-based or not; again, RASP itself is not the problem, the way it is implemented is) to collect in-app context, assets, and API usage, creating a baseline profile for each application in addition to guarding against known vulnerability classes such as SQL and command injection (typically the OWASP Top 10).

With ADRs, you can comprehend the risks you face, identify your most vulnerable applications, and address issues like misconfigurations, unused ports, and excessive privileges (this was not covered by RASP).
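Checks like unused ports and excessive privileges boil down to comparing what is exposed or granted with what is actually observed at runtime. A minimal sketch, assuming the agent reports open ports, ports that received traffic, granted permissions, and permissions actually exercised; the `AppPosture` record and its fields are hypothetical, and the permission strings merely look like AWS IAM actions for illustration.

```python
from dataclasses import dataclass


@dataclass
class AppPosture:
    """Hypothetical runtime posture record for one application."""
    name: str
    open_ports: set[int]
    ports_with_traffic: set[int]
    granted_permissions: set[str]
    used_permissions: set[str]


def posture_findings(app: AppPosture) -> dict[str, set]:
    """What is exposed or granted but was never observed in use at runtime."""
    return {
        "unused_ports": app.open_ports - app.ports_with_traffic,
        "excess_permissions": app.granted_permissions - app.used_permissions,
    }


app = AppPosture(
    name="payments-api",
    open_ports={8080, 9090, 5005},
    ports_with_traffic={8080},
    granted_permissions={"s3:GetObject", "s3:PutObject", "iam:PassRole"},
    used_permissions={"s3:GetObject"},
)
findings = posture_findings(app)
print(sorted(findings["unused_ports"]))        # [5005, 9090]
print(sorted(findings["excess_permissions"]))  # ['iam:PassRole', 's3:PutObject']
```

Anything unused during a short observation window is a candidate for removal, not proof that it is unnecessary; rare batch jobs may need a longer window.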

By monitoring both user and kernel space, ADRs build a behavior-based model or profile, because we all know that once an application is compromised, it starts behaving unusually, right? Well, not always, or at least it depends on where you look. Not all attacks involve system calls; an attacker may use memory-level attacks, e.g., disabling application-specific security mechanisms (in Java, for example, one can attach an agent that uses the Instrumentation API to disable Spring Security) and then freely use sensitive functionality of the application.

ADRs represent the continuation of what RASP started, trading a bit of performance overhead for application runtime security.

Finally, ADRs typically offer fine-grained asset management by tracking which libraries and frameworks are loaded and actually in use, allowing you to determine within seconds whether a supply chain vulnerability impacts you.
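A minimal sketch of that lookup, assuming the agent reports which libraries each application actually loaded at runtime and that an advisory gives the affected range as introduced ≤ version < fixed. Library names, versions, and the advisory are hypothetical, and `parse_version` only handles purely numeric dotted versions; real ecosystems (Maven, npm, PyPI) each need their own version comparison rules.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Parse a purely numeric dotted version, dropping trailing zeros so '2.15.0' == '2.15'."""
    parts = [int(p) for p in v.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def is_affected(version: str, introduced: str, fixed: str) -> bool:
    """True if introduced <= version < fixed."""
    return parse_version(introduced) <= parse_version(version) < parse_version(fixed)


def affected_apps(inventory: dict[str, dict[str, str]], package: str,
                  introduced: str, fixed: str) -> list[str]:
    """Applications whose loaded copy of `package` falls in the affected range."""
    return sorted(
        app
        for app, libs in inventory.items()
        if package in libs and is_affected(libs[package], introduced, fixed)
    )


# app -> {library: version}, as observed loaded at runtime (hypothetical data)
inventory = {
    "payments-api": {"example-logging-lib": "2.14.1", "example-http": "4.2.0"},
    "reporting": {"example-logging-lib": "2.17.0"},
    "frontend-bff": {"example-http": "4.2.0"},
}
print(affected_apps(inventory, "example-logging-lib", introduced="2.0", fixed="2.15.0"))
# ['payments-api']
```

Basing the inventory on what is loaded, rather than on what a manifest declares, is what lets the answer come back in seconds without rescanning every build.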